LC0bA4 Consider the Particular Case (short form of LC0bA3)

Consider the Particular Case *

The analysis of particular problems, to apply and illuminate principles, has long been a central activity in the physical sciences. Attempts to take guidance for the human sciences from the physical sciences have often been unconvincing and subject to criticism. Instead of borrowing notions from the physical sciences, I reflect here on the process of problem solving in a particular case, and from that process I abstract objectives, methods, and values that can help us identify and solve our own problems and judge the value of those solutions. My aim is not to derive a single, universal method from this example; rather, I analyze how we can proceed to conclusions of interest in which we can have confidence. I begin with a focus on the importance of analyzing particular cases. I use a particularly illuminating description by Feynman of a complex physical effect as a concrete example of a specific form of analysis, and I use that worked example to illuminate the meaning of research I have done. I believe this comparison is useful for understanding epistemological analyses based on computational modeling.

My primary source for the physics of this comparison is a series of popular lectures by Richard Feynman, published as QED: The Strange Theory of Light and Matter. [1] Feynman was a principal architect of Quantum Electrodynamics (QED) and advocated it as the most thorough and profound of current physical theories. He approached his theme through a discussion of the reflection of light from a surface. Reflection is a familiar phenomenon that educated laymen generally believe they understand well, both in terms of common sense (“the angle of reflection equals the angle of incidence”) and at the academic level of classical physical theory. Feynman’s analysis shows that classical theory does not adequately account for the phenomena known today; it even reveals what one might call “mysteries,” unsolvable except through quantum analysis. Furthermore, the interpretation in terms of quantum electrodynamics permits the analysis to be deepened, layer by layer, down to the level of interactions between atomic particles.

There is a dilemma in classical electrodynamics: light behaves both as a wave and as a particle. Evidence for the wave-like character of light comes, first, from interference phenomena, where light from two sources crosses, and second, from the diffraction of light passing through very small holes or slits. These experimental results, refined over hundreds of years since Newton’s original experiments, have been explainable only by the equations for waves interacting and “canceling out.” The wave theory broke down in modern times, when devices were developed that were sensitive enough to detect a single particle of light, a “photon.” The wave theory predicted that the “clicks” of a detector would get softer as the light got dimmer, whereas the “clicks” actually stayed at full strength but occurred less frequently. No reasonable model could explain this fact. Feynman claims that quantum electrodynamics explains the phenomena of “wave-particle duality” without claiming actually to “resolve” the dilemma, that is, to reduce the explanation of the phenomena to an intuitive model based on experience in the everyday world. His description of this “resolution” characterizes his effort as typical of the practice of physics today:

“…The situation today is, we haven’t got a good model to explain partial reflection by two surfaces; we just calculate the probability that a particular photomultiplier will be hit by a photon reflected from a sheet of glass. I am going to show you ‘how we count the beans’ – what the physicists do to get the right answer. I am not going to explain how the photons actually ‘decide’ whether to bounce back or go through; that is not known. (Probably the question has no meaning.) I will only show you how to calculate the correct probability that light will be reflected from glass of a given thickness, because that’s the only thing physicists know how to do! What we do to get the answer to this problem is analogous to the things we have to do to get the answer to every other problem explained by quantum electrodynamics…”

QED, Introduction, p. 24

A Little More Detail

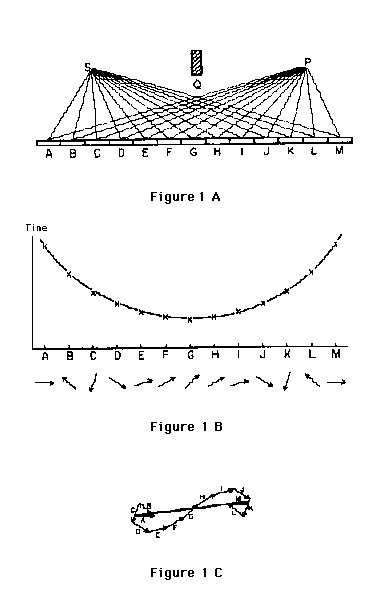

In Feynman’s account, the chance that a photon will reflect off a glass surface and reach a detector is represented by a “probability amplitude,” which can be drawn as an arrow; the probability is the square of the length of the final arrow. The direction of an arrow does not indicate the direction in which the photon moves. It is determined by the time elapsed along the path since the photon left the source: the arrow turns like the hand of a very fast stopwatch. Feynman uses this representation to analyze reflection from a mirror, as shown in Figure 1A. Light from the source (S) spreads across the surface of the mirror, and the paths by which a photon can reach the photomultiplier (P) are drawn in Figure 1A. Figure 1B graphs the time a photon takes to travel each of those paths. Figure 1C shows the addition of the amplitude arrows for all the possible reflection paths.

What determines how long the final arrow is? Notice a number of things. First, the ends of the mirror are not important: there, the little arrows wander around and don’t get anywhere. If one chopped off the ends of the mirror, it would hardly affect the length of the final arrow. The part of the mirror that gives the final arrow a substantial length is the part where the arrows are all pointing in nearly the same direction — because their time to reach the photomultiplier is almost the same. The time is nearly the same from one path to the next at the bottom of the curve, where the time is least. Feynman concludes:

“…To summarize, where the time is least is also where the time for the nearby paths is nearly the same; that’s where the little arrows point in nearly the same direction and add up to a substantial length; that’s where the probability of a photon reflecting off a mirror is determined. And that’s why, in approximation, we can get away with the crude picture of the world that says that light only goes where the time is least…

“So the theory of quantum electrodynamics gave the right answer—the middle of the mirror is the important part for reflection—but this correct result came out at the expense of believing that light reflects all over the mirror, and having to add a bunch of little arrows together whose sole purpose was to cancel out. All that might seem to you to be a waste of time—some silly game for mathematicians only. After all, it doesn’t seem like ‘real physics’ to have something there that only cancels out!…”
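The arrow-adding procedure can be tried numerically. The Python sketch below is a minimal illustration under assumed conditions, not Feynman’s own figures: the source and photomultiplier sit at the same height above a flat mirror, distances are measured in wavelengths, each path’s arrow turns in proportion to its length (and hence its travel time), and the common shrinking factor for reflection is ignored because only relative lengths matter here. The mirror is cut into strips one wavelength wide, and the arrows within each strip are added; all names and numbers are illustrative.

```python
import cmath
import math

# Hypothetical geometry (not Feynman's figures), in units of one wavelength:
# source S at (-D, H), photomultiplier P at (+D, H), flat mirror on y = 0.
WAVELENGTH = 1.0
D = 10.0
H = 10.0
MIRROR_HALF_WIDTH = 10.0


def path_length(x):
    """Length of the path S -> mirror point (x, 0) -> P."""
    return math.hypot(x + D, H) + math.hypot(x - D, H)


def little_arrow(x, dx):
    """Arrow for the path through x: its direction turns with travel time
    (proportional to path length); each arrow is weighted by the width dx."""
    angle = 2 * math.pi * path_length(x) / WAVELENGTH
    return dx * cmath.exp(1j * angle)


def strip_arrows(n_strips=20, samples_per_strip=200):
    """Cut the mirror into equal strips and add up the arrows in each.

    Returns a list of (strip_center, resultant_arrow) pairs.
    """
    width = 2 * MIRROR_HALF_WIDTH / n_strips
    dx = width / samples_per_strip
    strips = []
    for s in range(n_strips):
        left = -MIRROR_HALF_WIDTH + s * width
        total = sum(little_arrow(left + (k + 0.5) * dx, dx)
                    for k in range(samples_per_strip))
        strips.append((left + width / 2, total))
    return strips


if __name__ == "__main__":
    strips = strip_arrows()
    for center, arrow in strips:
        print(f"strip centered at x = {center:+5.1f}: length {abs(arrow):.3f}")
    final_arrow = sum(arrow for _, arrow in strips)
    print(f"final arrow length: {abs(final_arrow):.3f}")
```

With this geometry the strips next to the middle of the mirror give arrows close to their full length, while the strips at the ends turn almost all the way around and nearly cancel, which is the pattern described in the passage above.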

Reflections on the Example from Feynman’s Analysis

Although studies of particular cases have inherent limitations, Feynman’s analysis shows how solving such specific problems is central to the detailed analysis needed to illuminate the explicit meaning of general principles. One can argue that particular problems frequently come first, that general principles emerge from their solutions, and that those principles become fully comprehensible only when applied through subsequent models. Particular cases provide guidance in both the formulation and the comprehension of general laws. This process seems similar to Bourbaki’s description of how a mathematician creates an axiom-based system. [2]

Learning through Interaction

It seems remarkable that something as familiar as reflection from a mirror opens a pathway into the deepest puzzles of physics, such as wave-particle duality. And yet, why be surprised? Insights usually come from reconceptualizing familiar affairs. Learning is familiar too: we have all experienced it personally and see it in others all the time. There are psychological reasons to believe that a case-based approach is better suited than laboratory methods to understanding the character of learning. [3] Specifically, if one sees learning as an adaptive developmental mechanism, then that adaptation must come from interaction with the everyday world; one should look where the phenomena occur. Furthermore, if learning is a process of changing one state of a cognitive system into another, then representations of that process in computational terms should be more apt than schemes in which the procedural element of representation is less important. [4] In relating case-study details to computational models, one might use those details much as one uses boundary conditions to specify the particular form of a general solution to a differential equation. That is the use made of the psychological studies here: they serve as a foundation for the representations used in the models and as justification for focusing on key issues, namely the centrality of egocentricity in cognitive self-construction and the particularity of the naïve agent’s knowledge.

The virtue of machine learning studies is that they allow us no miracles: they can completely and unambiguously cover some examples of learning with mechanisms simple enough to be comprehensible. Must we claim that learning happens the same way in people? Not necessarily. Building such models is an exploration of the possible, according to a specification of which dimensions of consideration might be important. The computer’s help in systematically generating sets of all possible conditions frees our view of which experiences might serve as paths of learning. Feynman asked what happened at all those other places on the reflecting surface where “the angle of reflection” does not equal “the angle of incidence.” Similarly, when we can generate all possible interactions through which learning might occur, including some we at first imagine to be unimportant, we can explore those alternate paths and the suite of relationships among elements of the ensemble.

Considering All the Possibilities

The analysis I choose to contrast with Feynman’s began as a verbally formulated analysis of one child learning strategic play at tic-tac-toe (Lawler, 1985). It was continued in a constructive mode by developing a computer-embodied model, SLIM (Strategy Learner, Interactive Model; see Lawler and Selfridge, 1986). The model was based on search through the space of possible interactions between one programmed agent, SLIM, which has some of the characteristics of the psychological subject, and a second, REO, a programmed Reasonably Expert Opponent. REO is “expert” only in the sense that it uniformly applies a set of cell-preference rules for tactical play. [5]

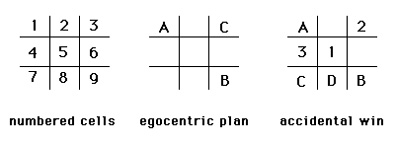

Strategies for achieving specific forks are the knowledge structures of SLIM. Each has three parts: a Goal, a sequence of Actions, and a set of Constraints on those actions (each triple is thereby a GAC). I simulated the operation of such structures in a program that plays tic-tac-toe against variations of REO. Applying these strategies leads to moves that often result in winning or losing; these outcomes in turn lead to the creation of new structures through specific modifications of the current GACs. The modifications are controlled by a small set of rules, so the GACs are interrelated by the ways in which modifications can map one to another. [6]
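A GAC can be pictured as a small record. The Python sketch below is one hypothetical rendering, not SLIM’s actual code: cells are assumed to be numbered 1 to 9 row by row, and because the text does not spell out what the Constraints contain, the single constraint used here (a planned cell must still be empty) is an illustrative assumption.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, Optional, Tuple

# Board: cell number (1-9, numbered row by row) -> "X" or "O"; empty cells are absent.
Board = Dict[int, str]


def planned_cell_free(board: Board, cell: int) -> bool:
    """Hypothetical constraint: a planned move needs an empty cell."""
    return cell not in board


@dataclass(frozen=True)
class GAC:
    """Goal, Actions, Constraints (field types are illustrative assumptions)."""
    goal: FrozenSet[int]          # cells that make up the fork, e.g. {1, 3, 9}
    actions: Tuple[int, ...]      # order in which to take them, e.g. (1, 9, 3)
    constraints: Tuple[Callable[[Board, int], bool], ...] = (planned_cell_free,)

    def next_action(self, board: Board, own_moves: Tuple[int, ...]) -> Optional[int]:
        """Next planned cell, or None if the plan is finished, off track, or blocked."""
        step = len(own_moves)
        if step >= len(self.actions) or own_moves != self.actions[:step]:
            return None
        cell = self.actions[step]
        if all(check(board, cell) for check in self.constraints):
            return cell
        return None


if __name__ == "__main__":
    gac = GAC(goal=frozenset({1, 3, 9}), actions=(1, 9, 3))
    print(gac.next_action({1: "X", 5: "O"}, (1,)))                    # 9
    print(gac.next_action({1: "X", 5: "O", 9: "X", 3: "O"}, (1, 9)))  # None: blocked
```

Keeping the constraints as a tuple of predicates is only a design choice for the sketch; it lets a "blocked" plan show up simply as `next_action` returning `None`.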

In order to evaluate specific learning mechanisms in particular cases, one must go beyond counting outcomes; one must examine and specify which forks are learned from which predecessors in which sequence and under which conditions of opponent cell preferences. The simulation avoided abstraction, in order to explore learning based on the modification of fully explicit strategies learned through particular experiences. [7] The results are first, a catalog of specific experiences through which learning occurs within this system and second, a description of networks of descent of specific strategies from one another. The catalog permits a specification of two desired results: first, which new forks may be learned when some predecessor is known; and second, which specific interaction gives rise to each fork learned. The results obviously also depend on the specific learning algorithm used by SLIM.

Consider how SLIM can learn the symmetrical variant of one particular fork. Suppose that SLIM begins with the objective of developing a fork represented by the Goal pattern {1 3 9} and will proceed with moves in the sequence of the plan [1 9 3] (see figure 2). SLIM moves first to cell 1. REO prefers the center cell (5) and moves there. SLIM moves to cell 9. The plan is followed until REO’s second move is to cell 3. [8] SLIM’s plan is blocked. The strategic goal {1 3 9} is abandoned, but the game is not over. SLIM, now playing tactically with the same set of rules as REO, moves into cell 7, the only remaining corner cell. Unknowingly, SLIM has created a fork symmetrical to its fork-goal. SLIM cannot recognize the fork; it lacks the knowledge to do so. What happens? REO blocks one of SLIM’s two ways to win, choosing cell 4. SLIM, playing tactically, recognizes that it can win and moves into cell 8. This is the key juncture.

SLIM recognizes “winning without expecting to do so” as a special circumstance. Moreover, SLIM assumes that it has won by creating an unrecognized fork (otherwise REO would have blocked the win). SLIM takes the pattern of its first three moves as a fork, and that pattern, {1 7 9}, becomes the goal of a new GAC. SLIM compares its known plans for creating a fork (there is one, [1 9 3]) with the list of its own moves executed in sequence before the winning move, [1 9 7]. The terminal step is the only difference between the two, so SLIM modifies the terminal step of the prototype plan to create a new plan, [1 9 7]. SLIM now has two GACs for future play. [9]
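The game just described, and the learning step that follows it, can be reproduced in a short program. The Python sketch below is a hypothetical reconstruction, not the SLIM code: it assumes row-by-row cell numbering, gives REO the cell preferences listed in note 5, lets SLIM follow its plan without regard to the opponent until a planned cell is taken (the egocentric play of note 7) and then use the same tactical rules, and branches the game wherever several cells are equally preferred, so that every playable game is generated. It then keeps only the wins SLIM did not expect and applies the “modify the terminal step” rule to them.

```python
# Cells are numbered row by row (an assumed layout that fits the game above):
#   1 2 3 / 4 5 6 / 7 8 9
LINES = [(1, 2, 3), (4, 5, 6), (7, 8, 9), (1, 4, 7),
         (2, 5, 8), (3, 6, 9), (1, 5, 9), (3, 5, 7)]
PREFERENCE_GROUPS = [(5,), (1, 3, 7, 9), (2, 4, 6, 8)]  # center, corners, sides


def completing_cells(board, mark):
    """Empty cells that would give `mark` three in a line."""
    found = set()
    for line in LINES:
        marks = [board.get(cell) for cell in line]
        if marks.count(mark) == 2 and marks.count(None) == 1:
            found.add(line[marks.index(None)])
    return sorted(found)


def tactical_choices(board, me, other):
    """Equally preferred cells under REO's rules (note 5): win, else block,
    else center, then a corner, then a side."""
    for candidates in (completing_cells(board, me), completing_cells(board, other)):
        if candidates:
            return candidates
    for group in PREFERENCE_GROUPS:
        free = [cell for cell in group if cell not in board]
        if free:
            return free
    return []


def has_line(board, mark):
    return any(all(board.get(cell) == mark for cell in line) for line in LINES)


def all_games(plan, board=None, moves=(), blocked=False):
    """Yield (SLIM's moves, winner, plan_was_blocked) for every playable game.

    SLIM ("X") moves first and follows `plan` without regard to the opponent
    until a planned cell is taken; from then on it plays tactically. REO ("O")
    always plays tactically. Ties among equally preferred cells branch the game.
    """
    board = dict(board or {})
    if len(board) == 9:
        yield moves, None, blocked                # draw
        return
    if len(board) % 2 == 0:                       # SLIM to move
        mark, step = "X", len(moves)
        if not blocked and step < len(plan) and plan[step] in board:
            blocked = True                        # plan blocked: drop to tactics
        if not blocked and step < len(plan):
            options = [plan[step]]
        else:
            options = tactical_choices(board, "X", "O")
    else:                                         # REO to move
        mark = "O"
        options = tactical_choices(board, "O", "X")
    for cell in options:
        nxt = {**board, cell: mark}
        nxt_moves = moves + (cell,) if mark == "X" else moves
        if has_line(nxt, mark):
            yield nxt_moves, mark, blocked
        else:
            yield from all_games(plan, nxt, nxt_moves, blocked)


def learn_terminal_step(plan, moves):
    """If SLIM's moves before its winning move differ from `plan` only in the
    last step, return the new (goal, plan); otherwise None."""
    fork, plan = tuple(moves[:-1]), tuple(plan)
    if plan and len(fork) == len(plan) and fork[:-1] == plan[:-1] and fork[-1] != plan[-1]:
        return frozenset(fork), fork
    return None


if __name__ == "__main__":
    prototype = (1, 9, 3)
    for moves, winner, blocked in all_games(prototype):
        if winner == "X" and blocked:             # a win SLIM did not expect
            print(moves, "->", learn_terminal_step(prototype, moves))
```

For the prototype plan [1 9 3] this generates four games. In the two where REO takes cell 3, SLIM’s plan is blocked, SLIM still wins, and the rule yields the new plan [1 9 7] with goal {1 7 9}, as in the narrative above. Branching on ties is one way to honor note 8’s remark that both alternatives at REO’s second move were completed in the simulation.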

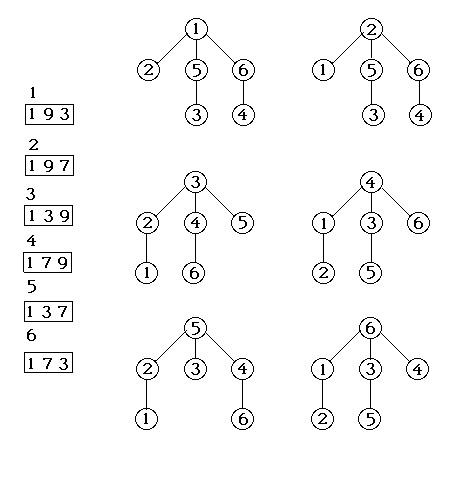

The complete set of results involves considering all paths of possible learning, even those deemed unlikely a priori, and concludes with a complete specification of all possible paths for learning every fork given any fork prototype. I take this consideration of all paths of learning to be comparable to the consideration of all paths over which a photon might travel when striking a surface. For corner-opening play, the first six GACs form a central collection of strategies. Their interrelations can be represented as trees of derivation or descent (as shown in Figure 3).

Plans Learnable from the Plan Specified as the Top Node of the Descent Trees

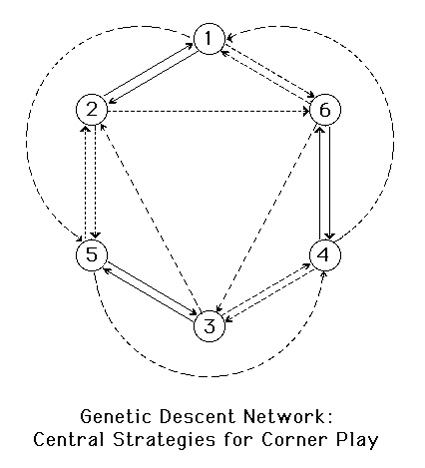

The tree with strategy 3 as top node may be taken as typical. Play in five specific games, beginning with only GAC 3 known, generates the other five central GACs. The specialness of the six central nodes is a consequence of their co-generatability. Some can generate each other directly (such as GACs 1 and 2); they are reciprocally generatable. Some lead to each other through intermediaries (such as GACs 1 and 3); they are cyclically generatable. For these six central strategies, the trees of structure descent can fold together into a connected network of descent, whose relations of co-generativity are shown in Figure 4.

The form of these descent networks is related to symmetry among forking patterns. But they include more: they reflect the play of the opponent, the order in which the forks are learned, and the specific learning mechanisms permitted in the simulations. These descent networks are summaries of results.
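Folding trees of descent into a network of co-generativity amounts to a reachability computation on a directed graph. The Python sketch below assumes a catalog of (prototype, learned strategy) pairs; the strategy names and links in the example are hypothetical and are not the actual GAC relations of Figures 3 and 4. Two strategies count as co-generative when each can be reached from the other; the pair is reciprocal when both links are direct, and cyclic when at least one direction runs through intermediaries.

```python
from collections import deque
from itertools import combinations


def descent_graph(catalog):
    """Aggregate (prototype, learned) records into a directed graph of descent."""
    graph = {}
    for prototype, learned in catalog:
        graph.setdefault(prototype, set()).add(learned)
        graph.setdefault(learned, set())   # every strategy becomes a node
    return graph


def reachable(graph, start):
    """Strategies learnable from `start` through one or more learning steps."""
    seen, queue = set(), deque([start])
    while queue:
        for nxt in graph[queue.popleft()]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def co_generative_pairs(graph):
    """Split mutually reachable pairs into reciprocal and cyclic ones."""
    reach = {node: reachable(graph, node) for node in graph}
    reciprocal, cyclic = [], []
    for a, b in combinations(sorted(graph), 2):
        if b in reach[a] and a in reach[b]:
            if b in graph[a] and a in graph[b]:
                reciprocal.append((a, b))
            else:
                cyclic.append((a, b))
    central = sorted({node for pair in reciprocal + cyclic for node in pair})
    return reciprocal, cyclic, central


if __name__ == "__main__":
    # Hypothetical catalog: A and B generate each other directly, C closes a
    # cycle through B, and P is a peripheral strategy that generates nothing.
    catalog = [("A", "B"), ("B", "A"), ("B", "C"), ("C", "A"), ("A", "P")]
    print(co_generative_pairs(descent_graph(catalog)))
```

In this toy catalog, P can be learned (from A) but does not belong to the central group, which loosely mirrors the text’s distinction between central and peripheral strategies.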

Comparison and Contrast

The apparent similarities relating Feynman’s analysis of reflection and the exploratory epistemology of SLIM occur at different levels. They begin with a focus on detail:

• in the analysis of specific cases and

• in the analysis of the interaction of objects or agents with their context.

The basic principle applied in both is to try all cases and construct an interpretation of them. To predict the learning of a specific strategy by a human subject, one would need to know what strategies are already known, how the opponent’s play would create opportunities for surprising wins for the subject, and what learning methods are in the subject’s repertoire. Knowing exactly these things in the machine case is what permits examination of the epistemological space of learnable strategies.

There are many paths of possible learning, some central and some peripheral. In QED, the criterion of centrality is the near-uniform direction of the photon arrows. In SLIM, the criterion of centrality is different and new: co-generativity. The basic method is to aggregate the results of all possibilities in a fully explicit manner. The process of aggregation is where the differences become systematic and significant. In QED, the aggregation of individual results is formally analytic: it is the solution of path-integral equations involving functions of complex variables. In SLIM, one begins with lists of games won without a plan. One then reformulates the relations between prototypes and generated plans into trees of fork-plan transformations, which are the trees of descent. The learning algorithms are the functional mechanisms effecting the transformations. In the analysis of SLIM, aggregation is systematic and constructive though not formal: one pulls together the empirical results of exhaustive exploration (the trees of descent in Figure 3) into a new representation scheme (the genetic network of descent in Figure 4).

In general, the process followed with these data is similar to Weyl’s use of reformulation in his general description of the development of theoretical knowledge in Symmetry, and to Bourbaki’s description of the genesis of axiomatic systems in The Architecture of Mathematics. Work with SLIM started from the general principle that learning happens through interaction. The model was constructed to represent the behavior of both the learner and the opponent in explicit detail, with a specification of representations and learning algorithms that gives the notion a precise meaning. Through the aggregation and reformulation of results, a new generality was achieved, which suggested a new idea: that the co-generatability of related but variant knowledge forms is what makes learning possible in any particular domain. This principle would support stable knowledge in minds with reconstructive memories, such as Bartlett (1932) suggests humans have.

There are also clear differences. QED claims completeness in covering all known interactions of photons and electrons. Strategy learning is important, but it is not as fundamental in cognition as electron-photon interactions are in physics. Moreover, quantum electrodynamics permits going further down in detail, to the interactions of such particles within matter.

As for the ways of aggregating results, the similarities are superficial unless the consequences are similar or significant for some other reason. What are the results of Feynman’s analysis? At least two are important for our purposes. Feynman says that one must regularly remind students of the goal of the process: discovering “the final arrow,” that is, the resultant vector of probability amplitudes, and understanding how the particularities of the interaction generate that “final arrow.” Our objective is comparable. The learnability analysis of this paper introduces two novelties: a new goal and a new principle. The new goal shifts the focus from the psychological to the epistemological. At this point one is not so much interested in what a particular child did as an individual. But the case provides some boundary conditions for modeling, through the question “if the details of at least one natural case have such characteristics, what kinds and paths of learning are possible?” [10] The question is of general interest if one admits that particularity and egocentricity are common characteristics of novice thought.

Where Feynman’s analysis of reflection depends on the principle of least time to determine which paths contribute most to the observable “final arrow,” SLIM points to co-generativity, represented by the connections in the central group (strategies 1-6) that permit each one to be learned no matter which is adopted as the prototype fork. Peripheral strategies [11] are rarely learned because they can be learned in few ways. The conclusion is that one can characterize the learnability of a domain, as a function of particular interactions among agents, by the connectedness of the possible paths of strategy learning. This is what the network of genetic descent does. That network is the equivalent of the “final arrow” of Feynman’s analysis of reflection, despite its different appearance and different basis.

Furthermore, these methods and representations reveal what makes it possible to learn in a problem domain, and even what makes it possible to judge that knowledge of a given domain is more learnable in one context than in another. The new principle is that the learnability of a domain is the result of all the possible cases of concrete learning through particular experience; those that contribute significantly to the learnability of a domain are those that are mutually reinforcing, in the sense that they are co-generative: A → B → C → A, and so on. This is directly comparable to the addition of arrows: the final effect results from the aggregation of related components, and isolated possibilities don’t add up to a significant result. The new principle, identifying the learnability of a domain with its connectedness, is a direct consequence of the state transformations being the learning algorithms of the system. One may paraphrase the situation thus: you’ll learn where Rome is if all roads lead there.

A Few Final Words

Feynman jokes that it doesn’t “seem like real physics” to add bunches of little arrows (representing the off-center parts of the mirror’s reflection) that will only cancel out in the end. What, then, is the “real physics”? Is it a compact and simple description that one can use and rely on? Or is it a thorough consideration of all the cases, including the improbable ones that may in special circumstances yield decisive results? The former is the result one hopes for: confidence in a succinct description so broadly applicable that we might call it a principle or law. The latter is a process that can increase our confidence in what we know, even if the result is not general enough to be judged a “law.”

Whitehead noted that the value of a formalism is that it lets you apply a practical method without concentrating on it, so that your attention can be given to inventing and applying methods for other problems. Knowing how to add, for example, permits us to ignore the process and focus on the meaning or significance of the elements operated on. Whitehead asserted that the same is true of the calculus. It is also important for models such as SLIM, which dependably generates a list of all relevant games playable given a prototype strategy. That list can be filtered to focus on some subset of games of high interest, such as those won by SLIM. Computational procedures are formal, but modeling as an activity is more constructive than analytic, which may make it more apt than analytic methods for representing what we know of learning. Finally, even if one cannot explain human learning at a level comparable to that at which one can explain reflection:

• Given the principle that co-generativity under specific algorithms explains differential learnability, one should be able to articulate why learning is possible in specific domains on the basis of the internal relationships of schemes of representations and learning algorithms, the latter seen as transformations between the states of those relationships.

This is a retreat from Lewin’s psychology of the pure case to epistemology. Others have retreated before us and still made a contribution, as physicists did in order to “resolve” wave-particle duality.

Throughout this paper, the issue of whether simple descriptions can constitute “real science” has continually surfaced. Different people have different criteria for judging what work is scientific and what is not. Peirce, for instance, argued that science is defined by intent (generally, a real objective of finding out what is the case in the world) and that method is derivative, though still significant. Exploring epistemology through computation is clearly scientific in intent, and thus scientific by Peirce’s criterion. It is also arguably scientific in method, as marked by its similarity to Feynman’s explication of QED. One should ask of such work not “is it science?” as judged by some narrow criterion, but “is it good science? Is it important science?” Ultimately, that judgment must be made on the merits of the particular case.

Notes:

[ *] This article presents parts of a longer chapter, “On the Merits of the Particular Case,” appearing in Lawler & Carley, 1993.

[**] Figure 24 from p. 43 of Feynman, 1985.

[***] Figure 1 from Lawler and Selfridge, 1986.

[ 1] In the descriptions that follow, I attempt a succinct paraphrase of Feynman’s text.

[ 2] “…A mathematician who tries to carry out a proof thinks of a well-defined mathematical object, which he is studying just at this moment. If he now believes that he has found a proof, he notices then, as he carefully examines all the sequences of inference, that only very few of the special properties in the object at issue have really played any significant role in the proof. It is consequently possible to carry out the same proof also for other objects possessing only those properties which had to be used. Here lies the simple idea of the axiomatic method: instead of explaining which objects should be examined, one has to specify only the properties of the objects which are to be used. These properties are placed as axioms at the start. It is no longer necessary to explain what the objects that should be studied really are.” [N. Bourbaki, p. 69 in J. Fang (1970).]

[ 3] Under the banner of “Situated Cognition,” educational psychologists are beginning to recognize the validity of techniques and arguments long advanced by anthropologists and case-study analysts. See Brown, Collins, and Duguid.

[ 4] This position, as well as many related ideas, is set forth in a combined developmental and computational discussion in Minsky’s 1972 Turing Award paper.

[ 5] REO’s preferences are those common in tactical play: first, win if possible; next, block if necessary; finally, choose a free cell, preferring the center cell, then any corner cell, and finally a side cell. See Human Problem Solving, Newell and Simon (1972).

[ 6] Subject to limitations based on rotational symmetry and the prototypical kinds of strategies adopted by the psychological subject, the generation of possible games was exhaustive. The analysis focused on won games in which SLIM moved first in cell 1. See figure 2. The focus on corner-opening play is based on the behavior of the subject and the relative variety of strategies such openings permit.

[ 7] This focus of the models is precisely where the egocentricity of naive thought and the cognitive self-construction of the psychological subject are embodied. The models are “egocentric” in the specific sense that no consideration of the opponent is taken unless and until the current plan is blocked. The psychological subject played this way. When a plan is blocked, SLIM drops from strategy-driven play into tactical play based on preferences for cells valued by type (center, corner) and not by relation to others.

[ 8] This is one of two possible games from this juncture; both are completed in the simulation.

[ 9] This form of learning by modifying the last term of a plan is one of two sorts; the other involves generating two possible plans based on deletion of the second term of a prototype plan.

We know the forks achieved by plans [1 9 3] and [1 9 7] are symmetrical. SLIM has no knowledge of symmetry and no way of knowing that the forks are related other than through descent, i.e. the derivation of the second from the first. This issue is discussed in the longer version of this text.

[10] There are, of course, other reasons for taking seriously the detailed study of the particular case. Classic arguments for doing so appear in Lewin (1935) and are discussed in the longer version of this paper (in Lawler and Carley, 1993).

[11] Such are those defined by other GACs discussed in Lawler and Selfridge (1986) and in the longer version of this paper.

References and Acknowledgements

Publication notes:

- Written in 1990-92.

- An abstract with this title was accepted for discussion at the International Conference of the Psychology of Mathematics Education, Tsukuba, Japan, 1993, Vol. III, p. 243.

- A short paper with this title, abstracted from the complete form of the paper, was published in the Journal of Mathematical Behavior, Vol. 13, No. 2, 1994.

- The complete paper titled On the Merits of the Particular Case, appears as Chapter 1 of Case Study and Computing, Lawler and Carley, Ablex, 1996.

References:

Bartlett, Frederic C. (1932) Remembering. Cambridge; Cambridge University Press.

Bourbaki, N. Excerpt from The Architecture of Mathematics, quoted on p.69 in J. Fang (1970), Towards a Modern Theory of Mathematics. Hauppauge, N.Y.: Paideia series in modern mathematics, Vol. 1.

Brown, John S., Allan Collins, & Paul Duguid. Situated Cognition and the Culture of Learning. Published in Educational Researcher, this paper is widely available. It is republished with commentaries from Educational Researcher in Artificial Intelligence in Education, vol. 2 (1992), Lawler and Yazdani (eds.).

Feynman, Richard 1985. QED: The Strange Theory of Light and Matter. Princeton; Princeton University Press.

Lawler, Robert W. 1985. Computer Experience and Cognitive Development. See chapter 4, The Articulation of Complementary Roles. Chichester, UK; Ellis Horwood, Ltd.

Lawler, Robert W. & Oliver G. Selfridge. 1986. Learning Concrete Strategies through Interaction. Proceedings of the Seventh Cognitive Science Conference. U.C. Irvine.

Lawler, Robert W. & Kathleen Carley. Forthcoming 1993. Case Studies and Computing. Norwood, N.J.; Ablex Publishing.

Lewin, Kurt. 1935. The Conflict Between Galilean and Aristotelian Modes of Thought in Contemporary Psychology. In Dynamic Psychology: Selected Essays of Kurt Lewin, D. Adams and K. Zener (translators). McGraw-Hill. New York; 1935.

Minsky, Marvin. (1972) Form and Content in Computer Science. Journal of the Association for Computing Machinery, January, 1972.

Peirce, Charles S. Lessons from the History of Science. In Essays in the Philosophy of Science (Vincent Tomas, Ed.). Liberal Arts Press, 1957.

Weyl, Hermann 1952. Symmetry. Princeton; Princeton University Press.

Acknowledgements:

This paper is based on work and ideas developed over many years at diverse places. It was my privilege to meet Feynman in the past when I studied at Caltech. Years later, I went to MIT to study with Minsky, directed to him by hearing from a classmate of my student days of Feynman’s interest in Minsky’s work. I undertook case studies of learning in Papert’s laboratory at MIT, guided by his appreciation of Piaget and the intellectual program represented now by Minsky’s book The Society of Mind, earlier a joint effort of Minsky and Papert. The influence of Papert, as epistemologist, remains deep here in specific ideas. On the suggestion of Minsky, I worked with Oliver Selfridge as an apprentice at computational modelling. Oliver’s contributions to this work are pervasive; the first publication about SLIM bears his name as well as my own. Bud Frawley showed me how to do the recursive game extension at the heart of SLIM. Sheldon White and Howard Gruber encouraged my case studies and showed me links between my own intellectual heroes and others (Vygotsky and Lewin). Discussions over several years with Howard Austin, Robert B. Davis, Wallace Feurzeig, and Joseph Psotka have left their imprint on my work. Arthur Miller’s criticisms of an early draft and suggestions for revision were always cogent and often helpful. Jim Hood, Mary Hopper, Gretchen Lawler, and Mallory Selfridge were kind enough to read early versions of this work and suggest useful directions for further development. No surprise then that the climate of MIT’s AI Lab, GTE’s Fundamental Research Lab, the Smart Technology Group at the Army Research Institute, and Purdue each made their special contribution to this paper. And if this effort reflects my admiration for one of the heroes of Caltech, perhaps it is not inappropriate to express my gratitude also to the memory of those mentors of my student days, Hunter Mead and Charles Bures, who first suggested I should take seriously the works of Langer and Peirce.